Category Archives: Azure

Keep your Azure subscription tidy with Azure Automation and Log Analytics

When delivering Azure training or Azure engagements, there is always a discussion about how important it is to have a policy and a lifecycle for Azure resources. Not only do we need a process to deploy resources to Azure, we also need a process to remove them. From a cost perspective this is extra important, as an orphaned IP address or disk will cost money even if it is not in use. We also need policies to make sure everything is configured according to company policy. Much can be solved with ARM policies, but not everything. For example, you can’t make sure all resources have locks configured.

To keep the Azure subscription tidy and to get an event/recommendation when something is not configured correctly, we can use Azure Automation and OMS Log Analytics. In this blog post, I will show an example of how this can be done 😊 The data flow is:

- An Azure Automation runbook is triggered on a schedule or manually. The runbook runs several checks, for example whether there are any orphaned disks.

- If there is anything that should be investigated, an event is created in OMS Log Analytics.

- In the OMS portal, we can build a dashboard to get a good overview of these events.

The example dashboard shows (download the example dashboard here)

- Total number of recommendations/events

- Number of resource types with recommendations

- Number of resource groups with recommendations. If each resource group corresponds to a service, it is easy to see the number of services that are not configured according to policy

The runbook in this example checks whether there are any disks without an owner, any VMs without automatic shutdown, any public IP addresses not in use and any databases without a lock configured. The runbook is based on PowerShell and it is easy to add more checks. The runbook submits data to OMS Log Analytics with Tao Yang’s PS module OMSDataInjection, download here. The data shows up in Log Analytics as a custom log called ContosoAzureCompliance_CL. The name of the log can be changed in the runbook.

The figure below shows the log search interface in the OMS portal. On the left side, you can see that we can filter based on resource, resource type, severity and resource group. This makes it easy to drill into a specific type of resource or resource group.
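The check-and-report pattern the runbook follows can be sketched roughly as below. This is a minimal illustration and not the actual runbook: the function name New-ComplianceEvent, the AttachedTo property and the sample objects are made up for the example. In a real runbook the input would come from Azure cmdlets, and the findings would be sent to Log Analytics with the OMSDataInjection module.

```powershell
# Sketch only: New-ComplianceEvent and the AttachedTo property are hypothetical.
function New-ComplianceEvent {
    param(
        [Parameter(Mandatory)] $Resource,
        [Parameter(Mandatory)] [string] $Message,
        [string] $Severity = 'Warning'
    )
    # One record per finding, with the fields used for filtering in the log search
    [pscustomobject]@{
        Resource      = $Resource.Name
        ResourceType  = $Resource.ResourceType
        ResourceGroup = $Resource.ResourceGroupName
        Severity      = $Severity
        Message       = $Message
    }
}

# Sample data standing in for the output of the Azure cmdlets
$resources = @(
    [pscustomobject]@{ Name = 'disk01'; ResourceType = 'Microsoft.Compute/disks'; ResourceGroupName = 'rg-app1'; AttachedTo = 'vm01' }
    [pscustomobject]@{ Name = 'disk02'; ResourceType = 'Microsoft.Compute/disks'; ResourceGroupName = 'rg-app1'; AttachedTo = $null }
    [pscustomobject]@{ Name = 'pip01'; ResourceType = 'Microsoft.Network/publicIPAddresses'; ResourceGroupName = 'rg-app2'; AttachedTo = $null }
)

# A resource that is not attached to anything is reported as orphaned
$findings = foreach ($r in $resources) {
    if (-not $r.AttachedTo) {
        New-ComplianceEvent -Resource $r -Message 'Resource is not in use'
    }
}

$findings | Format-Table Resource, ResourceType, ResourceGroup, Severity
```

Keeping each check as a small filter that emits the same record shape makes it easy to add more checks without changing how the data is submitted.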

Disclaimer: The cloud is a very fast-moving target. This means that by the time you’re reading this post, everything described here could have changed completely.

Note that this is provided “AS-IS” with no warranties at all. This is not a production-ready solution for your production environment, just an idea and an example.

Process OMS Log Analytics data with Azure Automation

Log Analytics in OMS provides a rich set of data processing features, for example custom fields. But there are scenarios where the current feature set is not enough.

In this scenario, we have a custom log file that logs messages from an application. From time to time the log file contains information about the number of files in an application queue. We would like to display the number of files in the queue as a graph in OMS. Custom fields will not work in this scenario: the log entries come in many different formats, and OMS cannot figure out the structure of the log entries when they do not all follow the same structure. OMS doesn’t support custom fields based on a subquery of the custom log entries, which otherwise could be a solution.

The example in this blog post ships the data to Azure Automation, processes it, and sends it back to Log Analytics in a suitable format. This can be done in two different ways:

- 1 – Configure an alert rule in Log Analytics to send data to Azure Automation. Azure Automation processes the data and sends it to OMS as a new custom log

- 2 – Azure Automation connects to Log Analytics and queries the data based on a schedule. Azure Automation processes the data and sends it to OMS as a new custom log

It is important to remember that events in Log Analytics don’t have an ID. Whichever solution we choose, we must build it so that all data is processed. If there is an interruption between Log Analytics and Azure Automation, it is difficult to track which events have already been processed.

One thing to note is that Log Analytics and Azure Automation show time differently. It seems that Azure Automation uses UTC when displaying time properties of the events, while the portal for Log Analytics (the OMS portal) uses the local time zone (in my example UTC+2 hours). This can be a bit tricky.
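One way to avoid confusion is to normalize every timestamp to UTC before comparing values or building a query window. A minimal sketch using only .NET date handling; the sample timestamp and the variable names are assumptions for illustration and are not taken from the example runbooks:

```powershell
# A timestamp as shown in the OMS portal, local time at UTC+2 (sample value)
$portalTime = [DateTimeOffset]::Parse(
    '2017-06-01T14:30:00+02:00',
    [Globalization.CultureInfo]::InvariantCulture)

# The same instant expressed in UTC, as Azure Automation appears to show it
$utcTime = $portalTime.UtcDateTime

# A query window for "the last hour", computed in UTC on both ends
$endUtc   = [DateTime]::UtcNow
$startUtc = $endUtc.AddHours(-1)

'Portal time {0} corresponds to {1} UTC' -f $portalTime.DateTime, $utcTime
```

Working in UTC on the Azure Automation side and converting only when comparing with what the portal displays keeps the two views consistent.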

1 – An Alert Rule pushes data to Azure Automation

In this example we need to do configuration both in Azure Automation and Log Analytics. The data flow will be

- An event is inserted into Log Analytics

- The event triggers an Alert Rule in Log Analytics, which triggers an Azure Automation runbook

- Azure Automation gets the data from the webhook and processes it

- Azure Automation sends data back to Log Analytics as a new custom log

To configure this in Log Analytics and Azure Automation, follow these steps

- In Azure Automation, import the AzureRM.OperationalInsights PowerShell module. This can be done from the Azure Automation account module gallery. More information about the module here

- Create a new connection of type OMSWorkSpace in the Azure Automation account

- Import the example runbook, download from WebHookDataFromOMS

- In the runbook, update the OMSConnection name, in the example named OMS-GeekPlayGround

- In the runbook, update how the data is split and what data you would like to send back to OMS. In the example I send back Computer, TimeGenerated and Files to Log Analytics

- Publish the runbook

- In Log Analytics, configure an Alert Rule to trigger the runbook

- Done!

2 – Azure Automation queries Log Analytics

In this example we don’t need to configure anything on the Log Analytics side. Instead, all configuration is done on the Azure Automation side. The data flow will be

- Events are inserted into Log Analytics

- Azure Automation queries Log Analytics based on a schedule

- Azure Automation gets the data and processes it

- Azure Automation sends data back to Log Analytics as a new custom log

To configure this in Azure Automation, follow these steps

- Import Tao Yang’s PS module OMSDataInjection into your Azure Automation account. Navigate to the PS Gallery and click Deploy to Azure Automation

- Import the AzureRM.OperationalInsights PowerShell module. This can be done from the Azure Automation account module gallery. More information about the module here.

- Create a new connection of type OMSWorkSpace in the Azure Automation account

- Verify that there is a connection to the Azure subscription that contains the Azure Automation account. In my example the connection is named “AzureRunAsConnection”

- Import the runbook, download here, GetOMSDataAndSendOMSData in TXT format

- In the runbook, update the OMSConnection name, in the example named OMS-GeekPlayGround

- In the runbook, update the Azure connection name, in the example named AzureRunAsConnection

- In the runbook, update the OMS workspace name, in the example named geekplayground

- In the runbook, update the Azure resource group name, in the example named “automationresgrp”

- In the runbook, update the Log Analytics query that Azure Automation runs to get data, in the example “Type=ContosoTestApp_CL queue”. Also update $StartDateAndTime with the correct start time. In the example Azure Automation collects data from the last hour (now minus one hour)

- In the runbook, update how the data is split and what data you would like to send back to OMS. In the example I send back Computer, TimeGenerated and Files to Log Analytics.

- Configure a schedule to execute the runbook with suitable intervals.

Both solutions send the number of files in the queue back to Log Analytics as a double data type. One of the benefits of building a custom PowerShell object and converting it to JSON before submitting it to Log Analytics is that you can easily control the data type. If you simply submit data to Log Analytics, the data type is detected automatically, but sometimes the automatic data type is not what you expect. With the custom PS object you can control it. Thanks to Stan for this tip. The data will be stored twice in Log Analytics: the raw data and the processed data from Azure Automation.
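The processing step can be sketched roughly as follows. This is an illustration and not the example runbook itself: the raw log layout, the regular expression and the sample entries are assumptions, since the real log format is not shown in this post. The point is the explicit [double] cast on the custom object before it is converted to JSON.

```powershell
# Sample raw entries; the layout and the regex below are assumptions.
$rawEntries = @(
    [pscustomobject]@{ Computer = 'APP01'; TimeGenerated = '2017-06-01T10:00:00Z'; RawData = 'INFO queue contains 42 files' }
    [pscustomobject]@{ Computer = 'APP01'; TimeGenerated = '2017-06-01T10:05:00Z'; RawData = 'WARN connection retry' }
    [pscustomobject]@{ Computer = 'APP02'; TimeGenerated = '2017-06-01T10:10:00Z'; RawData = 'INFO queue contains 7 files' }
)

$processed = foreach ($entry in $rawEntries) {
    # Only entries that mention the queue carry a file count
    if ($entry.RawData -match 'queue contains (\d+) files') {
        [pscustomobject]@{
            Computer      = $entry.Computer
            TimeGenerated = $entry.TimeGenerated
            # Cast explicitly so the value is serialized as a number, not a string
            Files         = [double]$Matches[1]
        }
    }
}

# JSON payload that would be submitted to Log Analytics as a new custom log
$json = $processed | ConvertTo-Json
$json
```

Without the cast, the regex capture would stay a string and would be serialized with quotes, which is the kind of unexpected data type described above.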

Disclaimer: The cloud is a very fast-moving target. This means that by the time you’re reading this post, everything described here could have changed completely.

Note that this is provided “AS-IS” with no warranties at all. This is not a production-ready solution for your production environment, just an idea and an example.

Upload VHD and create new VM with managed disk

In this post I would like to share the scripts and steps I used to create a new Azure VM with managed disks based on an uploaded Hyper-V VHD file. There are a number of things to do before uploading the VHD to Azure. Microsoft Docs has a good checklist here with steps on how to prepare a Windows VM to be uploaded to Azure. Some of the most important things to think about are that the disk must have a fixed size and be in VHD format, and that the VM must be generation 1. It is also recommended to enable RDP (😊) and install the Azure VM Agent. The overall steps are

- Create an Azure storage account

- Prepare the server according to the link above

- Export the disk in VHD format with fixed size

- Build a new VM with the exported disk. This is not required, but can be a good thing to do just to verify that the exported VHD file works before it is uploaded to Azure

- Upload the VHD file

- Create a new VM based on the VHD file

- Connect to the new VM and verify everything works

- Delete the uploaded VHD file

The following figure shows the storage account configuration where the VHD file is stored

The following image shows the upload process of the VHD file… the last image shows creation of the new VM

The script I used to upload the VHD file

# Log in and select the subscription
Login-AzureRmAccount -SubscriptionId 9asd3a0-aaaaa-4a1c-85ea-4d11111110be5

$localfolderpath = "C:\Export"

$vhdfilename = "LND-SRV-1535-c.vhd"

$rgName = "migration-rg"

$storageaccount = "https://migration004.blob.core.windows.net/upload/"

# Build the local path and the destination URL of the VHD file
$localpath = $localfolderpath + "\" + $vhdfilename

$urlOfUploadedImageVhd = $storageaccount + $vhdfilename

# Upload the VHD file to the storage account
Add-AzureRmVhd -ResourceGroupName $rgName -Destination $urlOfUploadedImageVhd -LocalFilePath $localpath -OverWrite

The script I used to create the new VM. Note that the script connects the new VM to the first subnet on a VNET called CONTOSO-VNET-PRODUCTION. Also note that the size of the VM is set to Standard_A2.

Login-AzureRmAccount -SubscriptionId 9asd3a0-aaaaa-4a1c-85ea-4d11111110be5

$vmName = "LND-SRV-1535"

$location = "West Europe"

$rgName = "migration-rg"

$vhdfile = "LND-SRV-1535-c"

$vhdsize = "25"

$sourceVHD = "https://migration004.blob.core.windows.net/upload/" + $vhdfile + ".vhd"

### Create new managed disk based on the uploaded VHD file

$manageddisk = New-AzureRmDisk -DiskName $vhdfile -Disk (New-AzureRmDiskConfig -AccountType StandardLRS -Location $location -CreateOption Import -SourceUri $sourceVHD -OsType Windows -DiskSizeGB $vhdsize) -ResourceGroupName $rgName

### Set VM Size

$vmConfig = New-AzureRmVMConfig -VMName $vmName -VMSize "Standard_A2"

### Get network for new VM

$vnet = Get-AzureRMVirtualNetwork -Name CONTOSO-VNET-PRODUCTION -ResourceGroupName CONTOSO-RG-NETWORKING

$ipName = $vmName + "-pip"

$pip = New-AzureRmPublicIpAddress -Name $ipName -ResourceGroupName $rgName -Location $location -AllocationMethod Dynamic

$nicName = $vmName + "-nic1"

$nic = New-AzureRmNetworkInterface -Name $nicName -ResourceGroupName $rgName -Location $location -SubnetId $vnet.Subnets[0].Id -PublicIpAddressId $pip.Id

$vm = Add-AzureRmVMNetworkInterface -VM $vmConfig -Id $nic.Id

### Set disk

$vm = Set-AzureRmVMOSDisk -Name $manageddisk.Name -ManagedDiskId $manageddisk.Id -CreateOption Attach -vm $vm -Windows

### Create the new VM

New-AzureRmVM -ResourceGroupName $rgName -Location $location -VM $vm

Finding correct permissions for custom roles in Azure RBAC

In Azure you can assign users, groups and services access to different resources. It is of course a good idea to assign permissions as exactly as possible to each user, group or service. Too many permissions mean that, for example, a user can affect more resources than planned, which exposes a larger attack surface. Too few permissions mean that the user can’t do their job in an effective way. With Azure Role-Based Access Control (RBAC) you can assign each user, group or service only the permissions they need to perform their job. Azure RBAC comes with many built-in roles; for more information about the built-in roles click here.

In some scenarios, the built-in roles are not suitable. That is when we can build our own roles 😊 Custom roles can be assigned to users, groups and services, just like the built-in roles. They can also be shared among all subscriptions that use the same Azure AD. I wrote a blog post about custom roles in October, Setting up team permissions with custom RBAC role and ARM policy. That blog post covers everything around getting started with custom roles.

But sometimes it is difficult to find the correct operations for your custom roles. For example, in this scenario, a user is assigned the built-in Contributor role on a resource group. The user can then create/edit/delete any resource within the resource group, but cannot delete the resource group or change access to it. But the user also needs permissions to administer locks on resources in the resource group. There is no built-in lock administrator role, so we need to build it.

Finding the correct Azure operations for lock administration is made easy with the following PowerShell script

$collection = Get-AzureRmProviderOperation *

foreach ($item in $collection)
{
    $desc = $item.Description
    If ($desc -like "*locks*")
    {
        $item.Operation
    }
}

The script goes through all providers and operations in Azure and returns each operation that has “locks” in the description.

We can then use the lock operations we just found to build a new custom RBAC role, as described in the blog post about RBAC from October (link earlier in this post). As you can see in the figure below, the role is assigned to the entire subscription. By doing this it is easy to use the role in multiple places within the subscription.
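As a rough sketch of that step, the operations can be placed in a role definition file for New-AzureRmRoleDefinition. The role name, description and subscription ID below are placeholders, and the three operations are examples of what the search may return; this is not the exact role shown in the figure.

```powershell
# Operations as they could be returned by the search (sample values;
# run the script to get the current list for your subscription)
$lockOperations = @(
    'Microsoft.Authorization/locks/read'
    'Microsoft.Authorization/locks/write'
    'Microsoft.Authorization/locks/delete'
)

# Role definition in the shape used by New-AzureRmRoleDefinition -InputFile.
# The name, description and subscription ID are placeholders.
$role = [ordered]@{
    Name             = 'Contoso - Lock Administrator'
    Description      = 'Can read, create and delete management locks.'
    Actions          = $lockOperations
    NotActions       = @()
    AssignableScopes = @('/subscriptions/00000000-0000-0000-0000-000000000000')
}

# Write the definition to a file in the temp folder
$roleFile = Join-Path ([IO.Path]::GetTempPath()) 'LockAdministrator.json'
$role | ConvertTo-Json -Depth 3 | Set-Content -Path $roleFile

# Import the role (requires a logged-in AzureRM session):
# New-AzureRmRoleDefinition -InputFile $roleFile
```

Using the subscription as the assignable scope matches the approach above, where the role is made available across the entire subscription.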

In this blog post we have seen how we can find operations for Azure custom RBAC roles. Each operation controls a task or action that a user, group or service can do. Assigning the correct amount of permissions to a role will increase both security and the ability to work effectively. Try to use groups instead of users when assigning permissions, as you would do in your Windows Server Active Directory. Assigning permissions to groups instead of users will make administration easier.

Inside Microsoft Azure IaaS [free e-book]

A long time ago in a galaxy… far far away…

We started to work on this book early last year, I think it was. There have been a lot of changes, updates, new features and services in Azure since then. But now it is finally public 🙂 The book provides a hands-on guide to utilizing Infrastructure-as-a-Service (IaaS) resources in Azure, with a primary focus on Azure Virtual Machines. Additional content covered in the book includes Azure PowerShell, Azure Virtual Networking, Azure Storage, Connecting Azure to your Datacenter, Migration, and Backup & Disaster Recovery.

Authors are Ryan Irujo, Janaka Rangama, Pete Zerger and me.

Download your copy of the free e-book here.

happy reading 🙂

Setting up team permissions with custom RBAC role and ARM policy

A common Azure infrastructure scenario is that the subscription administrator sets up one resource group per service/application. Service administrators or application administrators are then assigned permissions to the resource group and all resources within it. For a long time, it has been a challenge to limit what the service administrators could do within the resource group. For example, if you assigned them the permission to create a virtual machine, they could create any size of virtual machine and name it anything they liked. Another challenge has been default security roles that are too wide or too narrow. For example, if you assign service administrators the Contributor role they can create any kind of resource within the resource group, but the Virtual Machine Contributor role will not give them permissions to work with public IP addresses for their services.

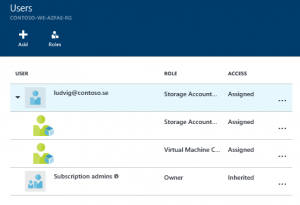

In this blogpost we will look into how ARM Policies and custom RBAC (Role Based Access Control) can be used to control what type of resources and how they are created within the resource group. In my example I have a resource group named CONTOSO-WE-AZFAE-RG. The naming convention is based on [ORGANIZATION]-[LOCATION West Europe in this example]-[service/workload in this example Azure Financial Analysis Engine]-[Azure Resource type in this example resource group].

The first thing is to assign permissions to the service administrators. In this scenario I want the service administrators team to have permissions to administrate everything around their virtual machines, including storage accounts, public IP addresses but not networking. The service administrators should also have permissions to connect (join) virtual machines to an existing virtual network hosted in another resource group. Azure Role-Based Access Control (RBAC) comes with a large number of security roles. Looking at the requirements in my scenario and the default roles, Virtual Machine contributor and Storage Account contributor are the two best options. I added the user account (could add a group too) as a virtual machine contributor and storage account contributor on the resource group level.

Now if ludvig@contoso.se, one of the service administrators, tries to create a new virtual machine, including a new public IP and a Network Security Group, the deployment fails. This is because he only has permissions to virtual machines and storage accounts in the CONTOSO-WE-AZFAE-RG resource group. The Virtual Machine Contributor role has access to join machines to a subnet (Microsoft.Network/virtualNetworks/subnets/join/action), but the challenge in this scenario is that the VNET is hosted in another resource group. The Virtual Machine Contributor role only applies to resources in the current resource group. Another challenge is that neither the Storage Account Contributor nor the Virtual Machine Contributor role gives the user any permissions for public IP addresses or network security groups.

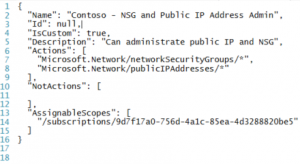

To give the service administrators team permissions to create and administer both public IP addresses and Network Security Groups, we will create a new custom security role, as none of the built-in security roles meet the scenario requirements. We will also create a new custom security role to give the service administrators permissions to join virtual machines to the VNET. Just like built-in roles, custom roles can be assigned to users, groups and applications at subscription, resource group or resource scope. Building custom security roles is described here. In this scenario we will build two custom security roles: the first handles NSG and public IP permissions and the second handles joining virtual machines to the VNET.

This is the definition of the first role.

This is the definition of the second role.

To import these roles into Azure, save the definitions as, for example, TXT files, then use the New-AzureRmRoleDefinition Azure PowerShell cmdlet to import each file. For example, New-AzureRmRoleDefinition -InputFile 'C:\Azure\CustomRoleOne.txt'

Once both roles are imported, assign them to the user. The “Contoso – Read and Join VNET” role must be assigned to the users on the resource group that contains the VNET that virtual machines will be connected to.

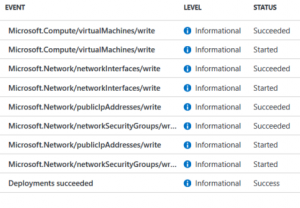

A service administrator can now deploy a new virtual machine, including network security group and public IP address, in the CONTOSO-WE-AZFAE-RG resource group. A virtual machine can also be connected to a VNET in another resource group, handled by the new “Contoso – Read and Join VNET” security role.

All good so far 😊

But the challenge now is that service administrators can create a virtual machine of any size and can also give the server any name. All servers should have names starting with “AZFAE-SRV” and use a VM size from the D-family. To solve this, we will use ARM policies. With policies we can focus on resource actions, for example restricting locations or settings on a resource. In this scenario we use RBAC to control what actions a user can perform on virtual machines, network security groups, public IP addresses and storage accounts. We can add policies to control how these resources are provisioned, controlling the settings, for example to make sure virtual machines are always deployed in West Europe. More information about policies here.

The following script sets up a new policy that restricts the virtual machine name. The last three lines of the script assign the new policy to the CONTOSO-WE-AZFAE-RG resource group.

The following script sets up a new policy that restricts the virtual machine size. The last three lines of the script assign the new policy to the CONTOSO-WE-AZFAE-RG resource group.

If one of the service administrators now tries to create a virtual machine with a size other than D1, D2, D3 or D4, an error will occur during deployment, saying that the deployment action is disallowed by one or more policies.
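For readers who want a starting point, policy rules for this scenario might look roughly like the sketch below. This is an assumption-based illustration and not the original scripts from this post: the policy names are made up, and the rules simply deny virtual machines whose name does not start with AZFAE-SRV or whose size is not one of the four D sizes mentioned above.

```powershell
# Sketch only: deny virtual machines whose name does not match the prefix
$namePolicy = @'
{
  "if": {
    "allOf": [
      { "field": "type", "equals": "Microsoft.Compute/virtualMachines" },
      { "not": { "field": "name", "like": "AZFAE-SRV*" } }
    ]
  },
  "then": { "effect": "deny" }
}
'@

# Sketch only: deny virtual machines with a size outside the allowed list
$sizePolicy = @'
{
  "if": {
    "allOf": [
      { "field": "type", "equals": "Microsoft.Compute/virtualMachines" },
      { "not": { "field": "Microsoft.Compute/virtualMachines/sku.name",
                 "in": [ "Standard_D1", "Standard_D2", "Standard_D3", "Standard_D4" ] } }
    ]
  },
  "then": { "effect": "deny" }
}
'@

# Check that both rules are valid JSON before using them
$nameRule = $namePolicy | ConvertFrom-Json
$sizeRule = $sizePolicy | ConvertFrom-Json

# Create and assign a definition (requires a logged-in AzureRM session):
# $rg = Get-AzureRmResourceGroup -Name 'CONTOSO-WE-AZFAE-RG'
# $definition = New-AzureRmPolicyDefinition -Name 'restrict-vm-name' -Policy $namePolicy
# New-AzureRmPolicyAssignment -Name 'restrict-vm-name' -Scope $rg.ResourceId -PolicyDefinition $definition
```

Parsing the rules locally first catches JSON mistakes before anything is sent to Azure; the same three commented lines can be repeated for the size rule.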

Summary: We now have a mix of standard RBAC roles and custom RBAC roles that set up the permissions our service administrators need. We then use ARM policies to control HOW resources are configured and deployed. The next challenge would be to control how many resources the service administrators deploy. RBAC gives us control of WHAT the administrators do, ARM policies give us control of HOW they do it. But there is no mechanism to control how many resources they deploy. For example, the service administrator in this blog post can now deploy hundreds of D servers, as long as they have the correct name and size. This could be solved with an external self-service portal including approval steps, but that is out of scope for this blog post 😊

If you want to review policy related events, you can use the Get-AzureRMLog cmdlet and look for Microsoft.Authorization/policies events, see here for more examples.

If you want to keep track of all access changes, you can use the Get-AzureRMAuthorizationChangeLog cmdlet to access the change history log. For example, “Get-AzureRMAuthorizationChangeLog -StartTime ([DateTime]::Now - [TimeSpan]::FromDays(7)) | FT Caller,Action,RoleName,PrincipalType,PrincipalName,ScopeType,ScopeName” will give you a list of all access changes during the last week. More information about this log here.

Disclaimer: The cloud is a very fast-moving target. This means that by the time you’re reading this post, everything described here could have changed completely.

Review Azure Automation jobs with PowerBI

In the Azure Portal, under an Azure Automation account, we can review automation jobs, for example the number of successful jobs in the last seven days. This is all good, but let’s say we need to know which service we spend the most automation minutes on. Which source starts the most runbook jobs? How many minutes did each job take? Which jobs ran on a specific hybrid worker group? In this blog post I will show an example of how this can be accomplished with PowerBI (an analytics service from Microsoft), an Azure SQL database and a runbook 🙂

All automation job data can be read with PowerShell, including some information we don’t see in the portal. This information can then be written, with PowerShell, to an Azure SQL database that PowerBI reads. We can then use PowerBI to drill down into the automation job data. This example includes two major steps

- A scheduled PowerShell-based Azure Automation runbook gets all Azure Automation job data from the Azure Automation account. The data is in some cases modified by PowerShell, for example some characters are replaced before they are stored in the database. PowerShell also calculates minutes spent per runbook job based on the start and end time from the runbook job data. The last part of the runbook writes the job data to the Azure SQL database

- PowerBI is configured to use the Azure SQL database as data source. PowerBI reads the data and presents it in a web-based dashboard that you can configure/design any way you want. In the figure below you can see an example of the PowerBI dashboard. On the right side of the figure you can see different parameters that can be used to filter the data and drill deeper into it.
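The minutes-per-job calculation from the first step can be sketched like this. The sample job records, the property names and the choice to round up to whole minutes are assumptions for illustration; the example runbook may calculate it differently.

```powershell
# Sample job records standing in for the job data read from the Automation
# account; the property names are assumptions for illustration.
$jobs = @(
    [pscustomobject]@{ RunbookName = 'Start-VMs'; StartTime = [datetime]'2017-06-01T08:00:00'; EndTime = [datetime]'2017-06-01T08:03:30' }
    [pscustomobject]@{ RunbookName = 'Stop-VMs'; StartTime = [datetime]'2017-06-01T18:00:00'; EndTime = [datetime]'2017-06-01T18:01:10' }
)

$report = foreach ($job in $jobs) {
    # Elapsed time between start and end of the job
    $duration = New-TimeSpan -Start $job.StartTime -End $job.EndTime
    [pscustomobject]@{
        RunbookName = $job.RunbookName
        # Round up, so a job that ran for 70 seconds counts as 2 minutes
        Minutes     = [int][math]::Ceiling($duration.TotalMinutes)
    }
}

$report
```

Storing the calculated minutes next to the runbook name makes it straightforward to sum minutes per runbook, service or technology in the reporting layer.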

In the figure below I have selected OMS as technology, and all the other fields are adapted to only show OMS-related information, for example only workers that have run an OMS-related runbook.

If you look at the PowerBI figure you can see that we can filter on technology and service. This is based on tags configured on each runbook. The runbook in step one exports this data too and writes it to the Azure SQL database. With these tags we can group runbooks together, based on owner, technology, service, integration or any other way we need to group them. In the figure below you can see how tags are configured on each runbook. If you run the example runbook with no tags on your runbooks, the data export will still work, but in PowerBI you will see “Not Configured” as service, technology and type of runbook.

Summary: We use an Azure Automation runbook to write automation job data to an Azure SQL database. PowerBI then reads the Azure SQL database and presents the data in an accessible way. You can then use PowerBI to drill down into the data

Note that this is provided “AS-IS” with no warranties at all. This is not a production-ready solution for your production environment, just an idea and an example.

Download the example runbook here. Download SQL script to setup the database here.

Document Azure subscription with PowerShell

I would like to share an idea around documentation for Azure subscriptions, and hopefully get some ideas and feedback about it. What I see at customers is that documenting what resources are deployed to Azure is a challenge. It is also a challenge to easily get an overview of configuration and settings. Fortunately, with Azure PowerShell we can easily get information about all resources in Azure. I have built an example script that exports some settings from Azure and writes them to a Word document.

The example script exports information about Virtual Machines, Network Interfaces and Network Security Groups (NSG). If you look in the script you can see examples of reading data from Azure and writing it to the Word document. You could of course read any data from your Azure environment and document it in Word. A benefit of a script is that you can schedule it to run at intervals to always have up-to-date documentation of all your Azure resources.

Another thing I was testing was building Visio drawings with PowerShell. To do this I used this PowerShell module. The idea with this example is to read data from Azure and then draw a picture. In my example I included virtual machines and related storage accounts and network.

Download my example PowerShell scripts here.

Note that this is provided “AS-IS” with no warranties at all. This is not a production ready solution for your production environment, just an idea and an example.

Monitoring Azure Backup Server with Microsoft Operations Management Suite

In this post I would like to share some ideas around monitoring Azure Backup Server and backup jobs with Microsoft Operations Management Suite (OMS). OMS comes with a solution for Azure Backup. With this solution I can see that the Azure Backup vault protects 3 servers and is using a total of X GB. If I click on “3 registered servers” I can see that these three servers are my Azure Backup Servers. The machines that are being protected by the backup servers are not shown. As a backup administrator you often need to know more than the number of backup servers and the used space. In this blog post I will show you how to collect and visualize that information with OMS 😊

The first thing to do is to install the OMS agent on the Azure Backup Server. Once the agent installation is successfully completed it is time to configure OMS to collect DPM events. Add the DPM Backup Events, DPM Alerts and CloudBackup event logs under Settings/Data. But before any events are written to these event logs, Azure Backup Server needs to be configured to publish backup events and alerts. This configuration is done in the Microsoft Azure Backup console, in the Management workspace under Options.

Once backup-related events start to come in to OMS, it is time to configure filters to visualize what we want to see. The following filter will get all successful backup jobs. Event ID 33222 is a successful backup job and event ID 33223 is a failed backup job.

Type=Event EventLevelName=information EventID = 33222 TimeGenerated>NOW-8HOURS | sort Computer

But as you can see in the figure, all values in the Computer column are the Azure Backup Server. I would like to see what data source was protected and also on which server. To do this, you can use custom fields in OMS. With custom fields we can extract data from the event and index it as new fields.

In the next two figures I have extracted the protected server and data source from the 33222 and 33223 events, from the ParameterXML parameters. As you can see, we now have one column for the protected server and one column for the data source. We could combine this into one filter, showing both failed and successful jobs. But I think it is better to have two filters when we start using them in My Dashboard.

We could also run a query like this to get all machines and their latest successful backup

It can also be interesting with a filter to show all protected servers that don’t have a successful backup for last X hours. In my lab environment I have some events from before I extracted fields from the events, as you can see below.

Once we have our filters and have saved them to favorites, we can use them in My Dashboard. We now have a quick overview of our backup jobs on the Azure Backup Server. Of course, you can add more filters to get more information on your dashboard.

Free E-book: Inside the Microsoft Operations Management Suite

Tao (@MrTaoYang), Stan (@StanZhelyazkov), Pete (@pzerger) and I have been working on a project for the last few weeks. We wanted to bring a learning resource for the MS Operations Management Suite to the community that is complete, comprehensive, concise…and free (as in beer). While we finish final editing passes over the next couple of weeks, we wanted to share an early copy of the book so you can start digging in while we finish our work!

Description: This preview release of “Inside the Microsoft Operations Management Suite” is an end-to-end deep dive into the full range of Microsoft OMS features and functionality, complete with downloadable sample scripts (on GitHub). The chapter list in this edition is shown below:

- Chapter 1: Introduction and Onboarding

- Chapter 2: Searching and Presenting OMS Data

- Chapter 3: Alert Management

- Chapter 4: Configuration Assessment and Change Tracking

- Chapter 5: Working with Performance Data

- Chapter 6: Process Automation and Desired State Configuration

- Chapter 7: Backup and Disaster Recovery

- Chapter 8: Security Configuration and Event Analysis

- Chapter 9: Analyzing Network Data

- Chapter 10: Accessing OMS Data Programmatically

- Chapter 11: Custom MP Authoring

- Chapter 12: Cross Platform Management and Automation

Download your copy here!

Detect all SQL Server cluster resources

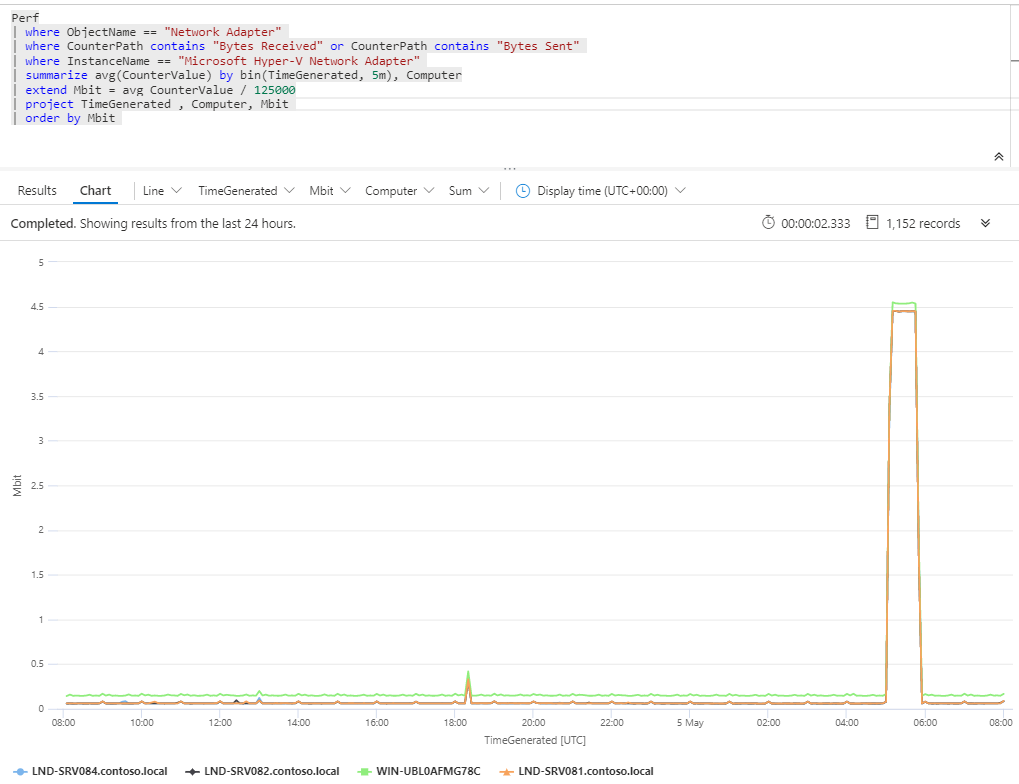

Detect all SQL Server cluster resources Measure bandwidth with Azure Monitor

Measure bandwidth with Azure Monitor

Recent Comments