
Category Archives: Scripts

Trigger a runbook based on an Azure Monitor alert, and pass alert data to the runbook

Last week Vanessa and I worked on a scenario to trigger Azure Automation based on Azure Monitor alerts. We noticed a lack of documentation around this, so we thought we would share our settings. We will not go into recommended practices around triggering automation jobs for faster response and remediation. Still, we recommend reading the Management Baseline chapter in the Cloud Adoption Framework, found here: Enhanced management baseline in Azure – Cloud Adoption Framework | Microsoft Docs. The chapter covers how to design a management baseline for your organization and how to design enhancements, such as automatic alert remediation with Azure Automation.

The scenario is that a new user account is created in Active Directory. A data collection rule collects the audit event for the new user account. An alert rule fires an alert based on the new event and triggers an Azure Automation runbook.

This blog post shows how to pass data from the alert to the runbook, such as information about the new user account.

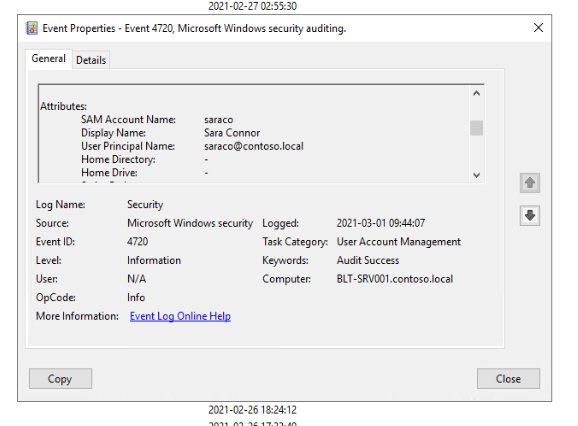

A new user account is created, named Sara Connor.

A security event is generated in the audit log.

The event is collected and sent to Log Analytics by a data collection rule.

An alert rule runs every five minutes to look for newly created accounts. The alert rule triggers the runbook. Note that the alert rule uses the Common Alert Schema to forward event information.

More information about the Common Alert Schema is available at Microsoft Docs. Below are the query used in the alert rule and the runbook code.

```kusto
// Event ID 4720 = "A user account was created"
Event
| where EventLog == "Security"
| where EventID == 4720
| parse EventData with * 'SamAccountName">' SamAccountName '</Data>' *
| parse EventData with * 'UserPrincipalName">' UserPrincipalName '</Data>' *
| parse EventData with * 'DisplayName">' DisplayName '</Data>' *
| project SamAccountName, DisplayName, UserPrincipalName
```

Runbook:

```powershell
param
(
    [Parameter (Mandatory = $false)]
    [object] $WebhookData
)

# Collect properties of WebhookData.
$WebhookName    = $WebhookData.WebhookName
$WebhookBody    = $WebhookData.RequestBody
$WebhookHeaders = $WebhookData.RequestHeader

# Information on the webhook that called this runbook
Write-Output "This runbook was started from webhook $WebhookName."

# Convert the request body (Common Alert Schema JSON) to an object
$WebhookBody = ConvertFrom-Json -InputObject $WebhookBody

# First row of the first result table; the values follow the column order of
# the query's project statement: SamAccountName, DisplayName, UserPrincipalName
$row = $WebhookBody.data.alertContext.SearchResults.tables[0].rows[0]

Write-Output "####### New User Created #########"
Write-Output "Username: $($row[0])"
Write-Output "Display Name: $($row[1])"
Write-Output "User UPN: $($row[2])"
```

This is the output from the runbook, including details about the new user account.
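The runbook above reads the values by position, which works when the alert returns exactly one row in the column order of the `project` statement. Here is a minimal sketch of a more defensive approach. Like the runbook, it assumes the alert uses the Common Alert Schema for a Log Analytics log alert, where `data.alertContext.SearchResults.tables` carries `columns` and `rows`. The hypothetical helper `ConvertFrom-AlertSearchResult` turns each row into an object keyed by column name, so several accounts created within the same five-minute window are all handled.

```powershell
# Hypothetical helper: converts the SearchResults tables of a Common Alert
# Schema payload (log alert) into one PSCustomObject per row, keyed by column name.
function ConvertFrom-AlertSearchResult {
    param(
        [Parameter(Mandatory = $true)]
        [object] $AlertBody
    )
    foreach ($table in $AlertBody.data.alertContext.SearchResults.tables) {
        # @() keeps a single column definition as an array
        $columns = @($table.columns)
        foreach ($row in $table.rows) {
            $values = @($row)
            $record = [ordered]@{}
            for ($i = 0; $i -lt $columns.Count; $i++) {
                $record[$columns[$i].name] = $values[$i]
            }
            [pscustomobject]$record
        }
    }
}
```

In the runbook, the three `Write-Output` lines could then become a loop such as `foreach ($user in ConvertFrom-AlertSearchResult -AlertBody $WebhookBody) { Write-Output "Username: $($user.SamAccountName)" }`.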

Visualize Service Map data in Microsoft Visio

A common question in data center migration scenarios is how servers depend on each other. Service Map can be very valuable in this scenario, as it visualizes TCP communication between processes on different servers.

Even though Service Map provides great value, we often hear a couple of requests, for example, to visualize data for more than one hour and to include more resources/servers in one image. This is not possible with the current feature set. But all the data needed is in the Log Analytics workspace, and we can access it through the REST API 🙂

In this blog post, we want to show you how to visualize this data in Visio. We have built a PowerShell script that exports data from the Log Analytics workspace and then builds a Visio drawing based on the information. The script connects to Log Analytics, runs a query and saves the result in a text file. The query in our example lists all inbound and outbound connections for a server during the last week. The script then reads the text file and draws each connection in the Visio file.

In the image below you can see an example of the output in Visio. In this example, we ran the script for a domain controller with a large number of connected servers, most likely more than the average server in a LOB application. You can also see that for all connections to Azure services, we replace the server icon with a cloud icon.

Of course, you can use any query you want and visualize the data any way you want in Visio. Maybe you want to use different server shapes depending on the communication type, or maybe you want to make some connections red if they have transferred a large amount of data.
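The red-connector idea can be prepared before anything is drawn. Here is a minimal sketch that assumes the exported text file has been read into objects with hypothetical `Source`, `Destination` and `Bytes` columns (for example with `Import-Csv`, with `Bytes` computed as `BytesSent + BytesReceived` in the query). The hypothetical `Get-ConnectionEdge` collapses repeated connections into one edge per server pair and flags edges above a threshold. The Visio drawing step with VisioBot3000 is not shown.

```powershell
# Hypothetical helper: one edge per Source/Destination pair, with a color
# that a drawing step could use for the connector.
function Get-ConnectionEdge {
    param(
        [Parameter(Mandatory = $true)]
        [object[]] $Connection,
        # Assumed threshold for "a large amount of data"; adjust as needed
        [long] $HeavyBytes = 1GB
    )
    $Connection |
        Group-Object -Property Source, Destination |
        ForEach-Object {
            $first = $_.Group[0]
            $sum = ($_.Group | Measure-Object -Property Bytes -Sum).Sum
            $total = [long]$sum
            $color = if ($total -ge $HeavyBytes) { 'Red' } else { 'Black' }
            [pscustomobject]@{
                Source      = $first.Source
                Destination = $first.Destination
                Connections = $_.Count
                TotalBytes  = $total
                Color       = $color
            }
        }
}
```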

In the PowerShell script, you can see that we use the server_m.vssx and networklocations.vssx stencil files to find server and cloud icons. These files are included in the Microsoft Visio installation. For more information about the PowerShell module used, please see VisioBot3000.

THE SOFTWARE IS PROVIDED “AS IS”, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

From Service Map to Network Security Group

Many data center migration scenarios include moving from a central firewall to multiple smaller firewalls and network security groups. A common challenge is how to configure each network security group (NSG). What should be allowed?

One way to map out which traffic to allow is using Service Map, as shown in previous blog posts. It is also possible to take it one step further, by automatically reading Service Map data from Log Analytics and building NSG rules based on the collected data.

To show an example of this, we have put together a PowerShell script. The script reads Service Map data for a specific server and builds an NSG and NSG rules based on the read data. The NSG is then attached to the server’s network adapter. Download the script here.
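The core of such a script is turning observed connections into rule definitions. Here is a minimal sketch of that step. It is not the downloadable script. The hypothetical `New-NsgRuleDefinition` takes inbound connections with assumed `RemoteIp` and `DestinationPort` properties, removes duplicates and returns one hashtable per allow rule, starting at priority 100 in steps of 10 (NSG rule priorities must be between 100 and 4096). The keys match parameters of `New-AzureRmNetworkSecurityRuleConfig`, so each hashtable could be splatted, for example `foreach ($rule in $rules) { New-AzureRmNetworkSecurityRuleConfig @rule }`.

```powershell
# Hypothetical helper: one inbound TCP allow rule per remote IP and destination port.
function New-NsgRuleDefinition {
    param(
        [Parameter(Mandatory = $true)]
        [object[]] $Connection,
        [int] $StartPriority = 100,
        [int] $PriorityStep = 10
    )
    $seen = @{}
    $priority = $StartPriority
    foreach ($c in $Connection) {
        $key = '{0}:{1}' -f $c.RemoteIp, $c.DestinationPort
        if ($seen.ContainsKey($key)) { continue }   # skip duplicate connections
        $seen[$key] = $true

        if ($priority -gt 4096) { throw 'Ran out of NSG rule priorities (max 4096).' }
        $name = 'Allow-Tcp-{0}-from-{1}' -f $c.DestinationPort, $c.RemoteIp
        @{
            Name                     = $name
            Description              = 'Generated from Service Map data'
            Access                   = 'Allow'
            Protocol                 = 'Tcp'          # Service Map only sees TCP
            Direction                = 'Inbound'
            Priority                 = $priority
            SourceAddressPrefix      = [string]$c.RemoteIp
            SourcePortRange          = '*'
            DestinationAddressPrefix = '*'
            DestinationPortRange     = [string]$c.DestinationPort
        }
        $priority += $PriorityStep
    }
}
```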

Of course, there are some risks with this; for example, if there is an “evil process” running on the server and communicating on the network, there will be an NSG rule for it too. Also, Service Map only collects data for TCP traffic, not UDP, and the script expects the server to already exist in Azure. You cannot use this script to create NSG rules for servers that have not been migrated.

Thanks to Vanessa for good conversation and ideas 🙂

Disclaimer: Cloud is a very fast-moving target. It means that by the time you’re reading this post, everything described here could have been changed completely. The blog post is provided “AS-IS” with no warranties.

Update Service Map groups with PowerShell

Service Map automatically discovers application components on Windows and Linux systems and maps the communication between services. With Service Map, you can view your servers in the way that you think of them: as interconnected systems that deliver critical services. Service Map shows connections between servers, processes, inbound and outbound connection latency, and ports across any TCP-connected architecture, with no configuration required other than the installation of an agent.

A common question is how to update machine groups in Service Map automatically. Last week my colleague Jose Moreno and I worked with Service Map and investigated how to automate machine group updates. The result was a couple of PowerShell examples showing how to create and maintain machine groups with PowerShell. You can find all the examples on Jose's GitHub page. With these scripts, we can now use a source, for example Active Directory groups, to set up and update machine groups in Service Map.
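Whatever the source, keeping a machine group up to date comes down to comparing the desired members with the current ones. Here is a minimal sketch of that comparison only. It does not reproduce Jose's scripts or call the Service Map API. The hypothetical `Get-MachineGroupChange` returns the machines to add and remove, matching names case-insensitively. The desired list could, for example, come from `Get-ADGroupMember -Identity <group> | Select-Object -ExpandProperty Name`.

```powershell
# Hypothetical helper: diff between the desired members (e.g. an AD group)
# and the current members of a Service Map machine group.
function Get-MachineGroupChange {
    param(
        [string[]] $Desired = @(),
        [string[]] $Current = @()
    )
    [pscustomobject]@{
        # In the source but not yet in the machine group
        Add    = @($Desired | Where-Object { $Current -notcontains $_ } | Sort-Object -Unique)
        # In the machine group but no longer in the source
        Remove = @($Current | Where-Object { $Desired -notcontains $_ } | Sort-Object -Unique)
    }
}
```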

Process OMS Log Analytics data with Azure Automation

Log Analytics in OMS provides a rich set of data processing features, for example custom fields. But there are scenarios where the current feature set is not enough.

In this scenario, we have a custom log file that logs messages from an application. From time to time, the log file contains information about the number of files in an application queue. We would like to display the number of files in the queue as a graph in OMS. Custom fields will not work in this scenario because the log entries have many different formats, and OMS cannot figure out the structure of the log entries when not all of them follow the same structure. OMS doesn't support custom fields based on a subquery of the custom log entries, which could otherwise be a solution.

The approach in this blog post is to ship the data to Azure Automation, process it, and send it back to Log Analytics in a suitable format. This can be done in two different ways:

- 1 – Configure an alert rule in Log Analytics to send data to Azure Automation. Azure Automation processes the data and sends it to OMS as a new custom log

- 2 – Azure Automation connects to Log Analytics and queries the data on a schedule. Azure Automation processes the data and sends it to OMS as a new custom log

It is important to remember that events in Log Analytics don't have an ID. Whichever solution we choose, we must make sure that all data is processed. If there is an interruption between Log Analytics and Azure Automation, it is difficult to track which events have already been processed.
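One way to keep track without an ID is a watermark: store the `TimeGenerated` of the last processed record and only process newer records on the next run. Here is a minimal sketch with the hypothetical helper `Select-UnprocessedRecord`. In Azure Automation, the watermark could be persisted between runs with `Get-AutomationVariable` and `Set-AutomationVariable`. A strict "newer than" filter still skips records that arrive late with an older `TimeGenerated`, or that share the watermark's exact timestamp. It narrows the problem rather than solving it.

```powershell
# Hypothetical helper: returns the records newer than the watermark (oldest
# first) and the watermark to store for the next run.
function Select-UnprocessedRecord {
    param(
        [object[]] $Record = @(),
        [Parameter(Mandatory = $true)]
        [datetime] $Watermark
    )
    $new = @($Record |
        Where-Object { [datetime]$_.TimeGenerated -gt $Watermark } |
        Sort-Object -Property { [datetime]$_.TimeGenerated })

    # The newest processed timestamp becomes the next watermark
    $next = if ($new.Count -gt 0) { [datetime]$new[-1].TimeGenerated } else { $Watermark }

    [pscustomobject]@{
        Records   = $new
        Watermark = $next
    }
}
```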

One thing to note is that Log Analytics and Azure Automation show time differently. Azure Automation seems to use UTC when displaying the time properties of the events, but the Log Analytics portal (the OMS portal) uses the local time zone (in my example UTC+2 hours). This can be a bit tricky.

1 – An alert rule pushes data to Azure Automation

In this example, we need to configure both Azure Automation and Log Analytics. The data flow is:

- An event is inserted into Log Analytics

- The event triggers an alert rule in Log Analytics, which triggers an Azure Automation runbook

- Azure Automation gets the data from the webhook and processes it

- Azure Automation sends the data back to Log Analytics as a new custom log
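Sending data back as a custom log is typically done through the HTTP Data Collector API, the API that modules such as Tao Yang's OMSDataInjection build on. As a sketch of what happens underneath, assuming that API, the hypothetical `New-DataCollectorSignature` builds the `SharedKey` authorization header. It computes an HMAC-SHA256 over the method, the body length in bytes, the content type, the `x-ms-date` header and the resource path, keyed with the Base64-decoded workspace key.

```powershell
# Hypothetical helper: builds the Authorization header for an HTTP Data
# Collector API request.
function New-DataCollectorSignature {
    param(
        [Parameter(Mandatory = $true)] [string] $WorkspaceId,
        [Parameter(Mandatory = $true)] [string] $SharedKey,      # Base64 workspace key
        [Parameter(Mandatory = $true)] [string] $Date,           # RFC1123, also sent as x-ms-date
        [Parameter(Mandatory = $true)] [int]    $ContentLength,  # body length in bytes
        [string] $Method = 'POST',
        [string] $ContentType = 'application/json',
        [string] $Resource = '/api/logs'
    )
    $stringToHash = "$Method`n$ContentLength`n$ContentType`nx-ms-date:$Date`n$Resource"
    $bytesToHash = [Text.Encoding]::UTF8.GetBytes($stringToHash)

    $hmac = New-Object System.Security.Cryptography.HMACSHA256
    try {
        $hmac.Key = [Convert]::FromBase64String($SharedKey)
        $hash = [Convert]::ToBase64String($hmac.ComputeHash($bytesToHash))
    }
    finally {
        $hmac.Dispose()
    }
    "SharedKey ${WorkspaceId}:$hash"
}
```

The request itself would be a POST to `https://<WorkspaceId>.ods.opinsights.azure.com/api/logs?api-version=2016-04-01` with the `Authorization`, `Log-Type` and `x-ms-date` headers. The date can be produced with `[DateTime]::UtcNow.ToString('r')`, and `Log-Type` becomes the custom log name, with `_CL` appended.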

To configure this in Log Analytics and Azure Automation, follow these steps:

- In Azure Automation, import the AzureRM.OperationalInsights PowerShell module. This can be done from the module gallery of the Azure Automation account. More information about the module here

- Create a new connection of type OMSWorkSpace in the Azure Automation account

- Import the example runbook (download it from WebHookDataFromOMS)

- In the runbook, update the OMSConnection name (named OMS-GeekPlayGround in the example)

- In the runbook, update how the data is split and what data you would like to send back to OMS. In the example, I send back Computer, TimeGenerated and Files to Log Analytics

- Publish the runbook

- In Log Analytics, configure an alert rule to trigger the runbook

- Done!
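The runbook step that splits the data depends entirely on the log format. Here is a minimal sketch that assumes a hypothetical log line containing text such as `Files in queue: 42` in the custom log's `RawData` column. The hypothetical `ConvertTo-QueueRecord` keeps only matching entries and returns Computer, TimeGenerated and Files, with Files cast to `[double]`.

```powershell
# Hypothetical helper: extracts the queue length from custom log records.
function ConvertTo-QueueRecord {
    param(
        [Parameter(Mandatory = $true)]
        [object[]] $Record
    )
    foreach ($r in $Record) {
        # Assumed line format "... Files in queue: 42"; other lines are skipped
        if ([string]$r.RawData -match 'Files in queue:\s*(?<files>\d+)') {
            [pscustomobject]@{
                Computer      = $r.Computer
                TimeGenerated = $r.TimeGenerated
                Files         = [double]$Matches['files']
            }
        }
    }
}
```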

2 – Azure Automation queries Log Analytics

In this example, we don't need to configure anything on the Log Analytics side. Instead, all configuration is done on the Azure Automation side. The data flow is:

- Events are inserted into Log Analytics

- Azure Automation queries Log Analytics on a schedule

- Azure Automation gets the data and processes it

- Azure Automation sends the data back to Log Analytics as a new custom log

To configure this in Azure Automation, follow these steps:

- Import Tao Yang's OMSDataInjection PowerShell module into your Azure Automation account. Navigate to the PowerShell Gallery and click Deploy to Azure Automation

- Import the AzureRM.OperationalInsights PowerShell module. This can be done from the module gallery of the Azure Automation account. More information about the module here.

- Create a new connection of type OMSWorkSpace in the Azure Automation account

- Verify that there is a connection to the Azure subscription that contains the Azure Automation account. In my example the connection is named “AzureRunAsConnection”

- Import the runbook GetOMSDataAndSendOMSData (download here, in TXT format)

- In the runbook, update the OMSConnection name (named OMS-GeekPlayGround in the example)

- In the runbook, update the Azure connection name (named AzureRunAsConnection in the example)

- In the runbook, update the OMS workspace name (named geekplayground in the example)

- In the runbook, update the Azure resource group name (named “automationresgrp” in the example)

- In the runbook, update the Log Analytics query that Azure Automation runs to get data, in the example “Type=ContosoTestApp_CL queue”. Also update $StartDateAndTime with the correct start time. In the example, Azure Automation collects data from the last hour (now minus one hour)

- In the runbook, update how the data is split and what data you would like to send back to OMS. In the example, I send back Computer, TimeGenerated and Files to Log Analytics.

- Configure a schedule to execute the runbook at suitable intervals.

Both solutions send the number of files in the queue back to Log Analytics as a double data type. One of the benefits of building a custom PowerShell object and converting it to JSON before submitting it to Log Analytics is that you can easily control the data type. If you simply submit data to Log Analytics, the data type is detected automatically, but sometimes the detected type is not what you expect. With the custom PowerShell object, you can control it. Thanks to Stan for this tip. Note that the data is stored twice in Log Analytics: the raw data and the processed data from Azure Automation.
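To illustrate the data type point: if Files is sent as the JSON string `"42"`, the Data Collector API stores it as a string field (`Files_s`). Sent as a JSON number, it becomes a double field (`Files_d`). Here is a minimal sketch with the hypothetical `ConvertTo-QueueRecordJson`, which casts each property explicitly before calling `ConvertTo-Json`.

```powershell
# Hypothetical helper: builds the JSON body with explicit types, so Files is
# serialized as a number even if it was read from text.
function ConvertTo-QueueRecordJson {
    param(
        [Parameter(Mandatory = $true)]
        [object[]] $Record
    )
    $typed = foreach ($r in $Record) {
        [pscustomobject]@{
            Computer      = [string]$r.Computer
            TimeGenerated = [string]$r.TimeGenerated
            Files         = [double]$r.Files
        }
    }
    # -InputObject with @() keeps even a single record as a JSON array
    ConvertTo-Json -InputObject @($typed) -Compress
}
```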

Disclaimer: Cloud is a very fast-moving target. It means that by the time you’re reading this post, everything described here could have been changed completely.

Note that this is provided “AS-IS” with no warranties at all. This is not a production ready solution for your production environment, just an idea and an example.

Upload VHD and create new VM with managed disk

In this post I would like to share the scripts and steps I used to create a new Azure VM with managed disks based on an uploaded Hyper-V VHD file. There are a number of things to do before uploading the VHD to Azure. Microsoft Docs has a good checklist here with steps on how to prepare a Windows VM to be uploaded to Azure. Some of the most important things to think about are that the disk must have a fixed size and be in VHD format, and that the VM must be generation 1. It is also recommended to enable RDP (😊) and install the Azure VM Agent. The overall steps are:

- Create an Azure storage account

- Prepare the server according to the link above

- Export the disk in VHD format with fixed size

- Build a new VM with the exported disk. This is not required, but it can be a good way to verify that the exported VHD file works before uploading it to Azure

- Upload the VHD file

- Create a new VM based on the VHD file

- Connect to the new VM and verify everything works

- Delete the uploaded VHD file
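Two of the requirements, fixed size and VHD format, can be checked before the upload by reading the 512-byte footer at the end of the file. Azure also requires the virtual size of the disk to be a whole number of MB. Here is a minimal sketch based on the published VHD footer layout: the cookie `conectix` at offset 0, the current (virtual) size at offset 48 and the disk type at offset 60, both stored big-endian, where disk type 2 means fixed. The function name `Test-AzureReadyVhd` is hypothetical.

```powershell
# Hypothetical pre-upload check that reads the VHD footer (last 512 bytes).
function Test-AzureReadyVhd {
    param(
        [Parameter(Mandatory = $true)]
        [string] $Path
    )
    $fullPath = (Resolve-Path -LiteralPath $Path).ProviderPath
    $footer = New-Object byte[] 512
    $stream = [System.IO.File]::OpenRead($fullPath)
    try {
        if ($stream.Length -lt 512) { throw "$fullPath is too small to be a VHD file." }
        [void]$stream.Seek(-512, [System.IO.SeekOrigin]::End)
        $offset = 0
        while ($offset -lt 512) {
            $read = $stream.Read($footer, $offset, 512 - $offset)
            if ($read -eq 0) { throw "Unexpected end of $fullPath." }
            $offset += $read
        }
    }
    finally {
        $stream.Dispose()
    }

    # Multi-byte footer fields are big-endian; reverse them for BitConverter
    [byte[]]$sizeBytes = $footer[48..55]
    [byte[]]$typeBytes = $footer[60..63]
    if ([BitConverter]::IsLittleEndian) {
        [Array]::Reverse($sizeBytes)
        [Array]::Reverse($typeBytes)
    }
    $virtualSize = [BitConverter]::ToUInt64($sizeBytes, 0)
    $diskType = [BitConverter]::ToUInt32($typeBytes, 0)

    [pscustomobject]@{
        IsVhd         = [Text.Encoding]::ASCII.GetString($footer, 0, 8) -eq 'conectix'
        IsFixed       = $diskType -eq 2
        VirtualSizeMB = $virtualSize / 1MB
        IsMbAligned   = ($virtualSize % 1MB) -eq 0
    }
}
```

For example, `Test-AzureReadyVhd -Path C:\Export\LND-SRV-1535-c.vhd` should report `IsVhd`, `IsFixed` and `IsMbAligned` as True before the file is uploaded.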

The following figure shows the storage account configuration where the VHD file is stored.

The following images show the upload process of the VHD file; the last image shows the creation of the new VM.


The script I used to upload the VHD file

```powershell
Login-AzureRmAccount -SubscriptionId 9asd3a0-aaaaa-4a1c-85ea-4d11111110be5

$localfolderpath = "C:\Export"
$vhdfilename = "LND-SRV-1535-c.vhd"
$rgName = "migration-rg"
$storageaccount = "https://migration004.blob.core.windows.net/upload/"

$localpath = Join-Path $localfolderpath $vhdfilename
$urlOfUploadedImageVhd = $storageaccount + $vhdfilename

# Upload the local fixed-size VHD to the storage account
Add-AzureRmVhd -ResourceGroupName $rgName -Destination $urlOfUploadedImageVhd -LocalFilePath $localpath -OverWrite
```

The script I used to create the new VM. Note that the script connects the new VM to the first subnet on a VNET called CONTOSO-VNET-PRODUCTION. Also note that the size of the VM is set to Standard_A2.

```powershell
Login-AzureRmAccount -SubscriptionId 9asd3a0-aaaaa-4a1c-85ea-4d11111110be5

$vmName = "LND-SRV-1535"
$location = "West Europe"
$rgName = "migration-rg"
$vhdfile = "LND-SRV-1535-c"
$vhdsize = 25
$sourceVHD = "https://migration004.blob.core.windows.net/upload/" + $vhdfile + ".vhd"

### Create new managed disk based on the uploaded VHD file
$diskConfig = New-AzureRmDiskConfig -AccountType StandardLRS -Location $location -CreateOption Import -SourceUri $sourceVHD -OsType Windows -DiskSizeGB $vhdsize
$manageddisk = New-AzureRmDisk -DiskName $vhdfile -Disk $diskConfig -ResourceGroupName $rgName

### Set VM size
$vmConfig = New-AzureRmVMConfig -VMName $vmName -VMSize "Standard_A2"

### Get network for new VM
$vnet = Get-AzureRmVirtualNetwork -Name CONTOSO-VNET-PRODUCTION -ResourceGroupName CONTOSO-RG-NETWORKING
$ipName = $vmName + "-pip"
$pip = New-AzureRmPublicIpAddress -Name $ipName -ResourceGroupName $rgName -Location $location -AllocationMethod Dynamic
$nicName = $vmName + "-nic1"
$nic = New-AzureRmNetworkInterface -Name $nicName -ResourceGroupName $rgName -Location $location -SubnetId $vnet.Subnets[0].Id -PublicIpAddressId $pip.Id
$vm = Add-AzureRmVMNetworkInterface -VM $vmConfig -Id $nic.Id

### Set disk
$vm = Set-AzureRmVMOSDisk -VM $vm -Name $manageddisk.Name -ManagedDiskId $manageddisk.Id -CreateOption Attach -Windows

### Create the new VM
New-AzureRmVM -ResourceGroupName $rgName -Location $location -VM $vm
```

Monitor a Minecraft server with OMS (including moonshine perf counters)

From time to time I play Minecraft with friends. As a former SCOM geek, I have of course configured monitoring for this server :) The server in this blog post is a Windows server, but most of the examples work the same for a Minecraft server running on Linux. On the Minecraft server there are two types of resources that I would like to monitor: server performance and Minecraft logs.

The first part, server performance, is easy to solve. I installed the OMS agent on the server and enabled Windows performance monitoring for processor, memory, disk queue and network traffic. Those are all out of the box OMS features.

For Minecraft, there is a log file, %Minecraft%\logs\latest.log, that Minecraft uses to log everything around “the world” running on the server. In this log file you can see players joining and disconnecting, and some player activity like achievements or player deaths. You can also use the log file to see if the server is running and if the world is ready. In OMS, under Settings/Data/Custom Logs, you can configure OMS to collect data from this log file. Note the name of the custom log, as it is the type you use to search for these events. In my example I have set up a custom log named WinMinecraftLog_CL (_CL is added automatically). More information about configuring custom logs here.

We can use Log Search to review the collected data (Type=WinMinecraftLog_CL) from the log file. Custom fields can be used to add a new searchable field for the log severity; in this example, OMS extracts WARN and INFO and stores it as WinMinecraftLogSeverity_CF. More information about custom fields here.
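Custom fields are defined by example in the portal, but it can help to know exactly what the field should capture. Here is a minimal sketch that assumes the vanilla server line format `[HH:mm:ss] [Thread/SEVERITY]: message`. The hypothetical `ConvertFrom-MinecraftLogLine` splits a line from latest.log into time, thread, severity and message. The severity part corresponds to what WinMinecraftLogSeverity_CF captures.

```powershell
# Hypothetical parser for lines such as
# "[10:15:32] [Server thread/INFO]: Sara joined the game"
function ConvertFrom-MinecraftLogLine {
    param(
        [Parameter(Mandatory = $true, ValueFromPipeline = $true)]
        [string] $Line
    )
    process {
        if ($Line -match '^\[(?<time>\d{2}:\d{2}:\d{2})\] \[(?<thread>[^\]]+)/(?<severity>[A-Z]+)\]: (?<message>.*)$') {
            [pscustomobject]@{
                Time     = $Matches['time']
                Thread   = $Matches['thread']
                Severity = $Matches['severity']
                Message  = $Matches['message']
            }
        }
        # Lines in any other format produce no output
    }
}
```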

Another interesting thing to monitor on a Minecraft server is the number of connected players. Unfortunately, the Minecraft server doesn't have a performance counter for this or an easy way to read it from the server. But you can count the number of connections on the Minecraft port (default port 25565) 🙂 I have created a PowerShell script that counts the number of connections and writes it as a new performance counter on the local server. The script also counts the number of unique players that have logged on to the server (the number of files in the %Minecraft%\world\playerdata folder) and writes it as a performance counter. The script can be downloaded here, WritePerfData. Thanks to Michael Repperger for the performance counter example.
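The two values can be sketched as follows. This is not the WritePerfData script, and writing the values as performance counters is left out. The hypothetical `Get-MinecraftConnectionCount` counts established connections on the Minecraft port from objects shaped like the output of `Get-NetTCPConnection`. That output also includes the listening socket, so filtering on State keeps it from being counted as a player. The hypothetical `Get-MinecraftPlayerCount` counts only the `.dat` files in the playerdata folder, so other files in the folder, such as backup copies, are not counted.

```powershell
# Hypothetical helper: counts established connections on the Minecraft port.
function Get-MinecraftConnectionCount {
    param(
        # Objects with LocalPort and State, e.g. from Get-NetTCPConnection
        [Parameter(ValueFromPipeline = $true)]
        [object] $Connection,
        [int] $Port = 25565
    )
    begin { $count = 0 }
    process {
        if ($Connection -and $Connection.LocalPort -eq $Port -and "$($Connection.State)" -eq 'Established') {
            $count++
        }
    }
    end { $count }
}

# Hypothetical helper: counts player data files (one .dat file per player).
function Get-MinecraftPlayerCount {
    param(
        [Parameter(Mandatory = $true)]
        [string] $PlayerDataPath
    )
    @(Get-ChildItem -Path $PlayerDataPath -File | Where-Object { $_.Extension -eq '.dat' }).Count
}
```

On the server, the connection count could be read with `Get-NetTCPConnection -LocalPort 25565 -ErrorAction SilentlyContinue | Get-MinecraftConnectionCount`.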

These two performance counters can then be collected by OMS as Windows Performance counters

Once all data is collected, both Minecraft-specific and server data, OMS View Designer can be used to build a Minecraft dashboard (more information about View Designer here). The dashboard gives us an overview of the Minecraft server from both a performance and a Minecraft perspective. This example dashboard also includes a list of events from the log file, showing if there are a lot of warning events in the log file. Each tile in the dashboard is a link to OMS Log Search that can be used to drill deeper into the data.

A next step could be to index and measure more fun world-specific numbers, for example achievements and the most dangerous monster in the Minecraft world 🙂 On the server there is a folder, %Minecraft%\world\stats, with numbers about each user in the world, for example the number of trees cut down or blocks built. These could also be fun numbers to collect 🙂

Disclaimer: Cloud is a very fast-moving target. It means that by the time you’re reading this post, everything described here could have been changed completely.

Document Azure subscription with PowerShell

I would like to share an idea around documentation for Azure subscriptions, and hopefully get some ideas and feedback about it. What I see at customers is that documenting which resources are deployed to Azure is a challenge. It is also a challenge to easily get an overview of configuration and settings. Fortunately, with Azure PowerShell we can easily get information about all resources in Azure. I have built an example script that exports some settings from Azure and writes them to a Word document.

The example script exports information about Virtual Machines, Network Interfaces and Network Security Groups (NSG). In the script you can see examples of reading data from Azure and writing it to the Word document. You could of course read any data from your Azure environment and document it in Word. A benefit of a script is that you can schedule it to run at intervals to always have up-to-date documentation of all your Azure resources.

Another thing I was testing was building Visio drawings with PowerShell. To do this I used this PowerShell module. The idea with this example is to read data from Azure and then draw a picture. In my example I included virtual machines and related storage accounts and network.

Download my example PowerShell scripts here.

Note that this is provided “AS-IS” with no warranties at all. This is not a production ready solution for your production environment, just an idea and an example.

Add network card to Azure VM

This is a script I wrote when Pete and I were preparing our System Center Universe session a couple of days ago. The scenario is that you have a VM running in Azure with one network card, and now you want to add another network card. It is only possible to add a network card when creating the VM. This script deletes the current VM, keeps the disk, creates a new VM with two network cards and attaches the disk again.

Something to add to this script is handling of the VM size. Depending on the VM size, you can have a different number of network cards; for example, if you have 8 CPU cores you can have 4 network cards. It would be good if the script could handle that. It would also be good if the script could handle multiple disks on the original VM. That might show up in vNext 🙂

```powershell
$VMName = "SCU001"
$ServiceName = "SCU001"
$InstanceSize = "ExtraLarge"
$PrimarySubNet = "Subnet-1"
$SecondarySubnet = "Subnet-2"
$VNET = "vnet001"

# Get the current disk (match on the role and cloud service the disk is attached to)
$disk = Get-AzureDisk | Where-Object { $_.AttachedTo.RoleName -eq $VMName -and $_.AttachedTo.HostedServiceName -eq $ServiceName }

### Shut down the VM
Get-AzureVM -Name $VMName -ServiceName $ServiceName | Stop-AzureVM -Force

# Remove the VM but keep the disk
Remove-AzureVM -Name $VMName -ServiceName $ServiceName

# Deploy a new VM with the old disk and a second network card
$vmconf = New-AzureVMConfig -Name $VMName -InstanceSize $InstanceSize -DiskName $disk.DiskName |
    Set-AzureSubnet -SubnetNames $PrimarySubNet |
    Add-AzureNetworkInterfaceConfig -Name "Ethernet2" -SubnetName $SecondarySubnet |
    Add-AzureEndpoint -Protocol tcp -LocalPort 3389 -PublicPort 3389 -Name "Remote Desktop"

New-AzureVM -ServiceName $ServiceName -VNetName $VNET -VMs $vmconf
```

List all containers in all storage accounts

This script lists all containers in all storage accounts in your Azure subscription. It is handy when looking for a container or a blob endpoint.

```powershell
# List every container in every (classic) storage account in the subscription
$accounts = Get-AzureStorageAccount

foreach ($account in $accounts)
{
    $saKey = Get-AzureStorageKey -StorageAccountName $account.StorageAccountName
    $ctx = New-AzureStorageContext -StorageAccountName $account.StorageAccountName -StorageAccountKey $saKey.Primary
    Get-AzureStorageContainer -Context $ctx
}
```

Finding correct permissions for custom roles in Azure RBAC

