
Category Archives: Runbook

Custom availability monitoring

What if you need to monitor something that requires a custom script, for example a sequence of checks? That is possible with a PowerShell script that performs all the checks and then submits the result to the workspace. In this example I will monitor port 25565 on a Minecraft server with a PowerShell script. Monitoring a network port is also possible with the network insight feature, but this is still a good example because you can change the PowerShell script to do almost anything.

The first step was to create an Azure Automation runbook that checks if the port is open. The runbook submits the result to Log Analytics through the data collector API.
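The original runbook is not included in the post, so the following is only a minimal sketch of what such a runbook could look like. The function names, the `Failure` value, and the parameter names are assumptions made for illustration; the log type `Custom_Port` and the fields `Port` and `Result` are inferred from the `Custom_Port_CL`, `Port_s` and `Result_s` names used in the query later in this post. The signature follows the shared key scheme of the HTTP Data Collector API.

```powershell
# Sketch only: function names and the 'Failure' value are assumptions, not the original runbook.

function Test-TcpPort {
    param([string]$ComputerName, [int]$Port, [int]$TimeoutMs = 3000)
    $client = New-Object System.Net.Sockets.TcpClient
    try {
        # ConnectAsync plus Wait gives a timeout instead of blocking indefinitely.
        $task = $client.ConnectAsync($ComputerName, $Port)
        return ($task.Wait($TimeoutMs) -and $client.Connected)
    }
    catch { return $false }   # refused or unreachable counts as closed
    finally { $client.Close() }
}

function Get-LogAnalyticsSignature {
    param([string]$WorkspaceId, [string]$SharedKey, [string]$Date, [int]$ContentLength)
    # String to sign: method, content length, content type, x-ms-date header, resource.
    $stringToSign = "POST`n$ContentLength`napplication/json`nx-ms-date:$Date`n/api/logs"
    $bytesToSign  = [Text.Encoding]::UTF8.GetBytes($stringToSign)
    $hmac         = New-Object System.Security.Cryptography.HMACSHA256
    $hmac.Key     = [Convert]::FromBase64String($SharedKey)
    $encodedHash  = [Convert]::ToBase64String($hmac.ComputeHash($bytesToSign))
    return ('SharedKey {0}:{1}' -f $WorkspaceId, $encodedHash)
}

function Send-LogAnalyticsRecord {
    param([string]$WorkspaceId, [string]$SharedKey, [string]$LogType, [object]$Record)
    $body      = [Text.Encoding]::UTF8.GetBytes((ConvertTo-Json -InputObject $Record))
    $date      = [DateTime]::UtcNow.ToString('r')
    $signature = Get-LogAnalyticsSignature -WorkspaceId $WorkspaceId -SharedKey $SharedKey `
                     -Date $date -ContentLength $body.Length
    $headers   = @{ 'Authorization' = $signature; 'Log-Type' = $LogType; 'x-ms-date' = $date }
    $uri       = "https://$($WorkspaceId).ods.opinsights.azure.com/api/logs?api-version=2016-04-01"
    Invoke-RestMethod -Uri $uri -Method Post -ContentType 'application/json' -Headers $headers -Body $body
}

# Example usage (placeholder host name, workspace ID and key):
# $open   = Test-TcpPort -ComputerName 'minecraft.example.com' -Port 25565
# $result = 'Failure'; if ($open) { $result = 'Success' }
# $record = [pscustomobject]@{ Port = 'Minecraft Port'; Result = $result }
# Send-LogAnalyticsRecord -WorkspaceId $workspaceId -SharedKey $sharedKey -LogType 'Custom_Port' -Record $record
```

In a real runbook the workspace ID and key would more likely be read from Automation variables or a connection asset than hard-coded.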

A challenge with the runbook is that schedules only allow it to run once per hour. To trigger the runbook more often, for example every five minutes, we can trigger it from a Logic App.

The PowerShell-based runbook is now triggered every five minutes and sends its result to the Log Analytics workspace. Once the data is in the workspace, we can query it and, for example, show the availability of the network port. As you can see on line six of the query, I have commented out (for demo purposes) the line that limits the result to events from yesterday. The blog post Return data only during office hours and workdays explains the query in detail.

let totalevents = (24 * 12);

Custom_Port_CL

| extend localTimestamp = TimeGenerated + 2h

| where Port_s == "Minecraft Port"

| where Result_s == "Success"

// | where localTimestamp between (startofday(now(-1d)) .. endofday(now(-1d)) )

| summarize sum01 = count() by Port_s

| extend percentage = (todouble(sum01) * 100 / todouble(totalevents))

| project Port_s, percentage, events=sum01, possible_events=totalevents

The query shows the availability as a percentage, based on one expected event every five minutes (24 * 12 = 288 events per day).
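The same arithmetic can be checked outside the workspace. The helper below is a small sketch, not part of the original post; the function and parameter names are assumptions. It mirrors the query: the number of successful events is divided by the number of events expected in the period.

```powershell
# Sketch only: mirrors the percentage calculation in the query above.
function Get-AvailabilityPercentage {
    param(
        [int]$SuccessfulEvents,
        [int]$IntervalMinutes = 5,   # one event expected every five minutes
        [int]$PeriodHours = 24       # the query assumes a full day
    )
    # 24 hours * 60 minutes / 5 minutes = 288 expected events
    $expected = $PeriodHours * 60 / $IntervalMinutes
    return [math]::Round([double]($SuccessfulEvents * 100 / $expected), 2)
}

Get-AvailabilityPercentage -SuccessfulEvents 285
```

Note that, like the query, this only gives a meaningful number when the counted events cover exactly the period used for the expected total.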

Trigger a runbook based on an Azure Monitor alert, and pass alert data to the runbook

Last week Vanessa and I worked on a scenario to trigger Azure Automation based on Azure Monitor alerts. We noticed the lack of documentation around this, so we thought we could share our settings. We will not go into recommended practices for triggering automation jobs for faster response and remediation. Still, we recommend that you read the Management Baseline chapter in the Cloud Adoption Framework, found here: Enhanced management baseline in Azure – Cloud Adoption Framework | Microsoft Docs. The chapter covers designing a management baseline for your organization and how to design enhancements, such as automatic alert remediation with Azure Automation.

The scenario is that a new user account is created in Active Directory. A data collection rule collects the audit event of the new user account. An alert rule triggers an alert based on the latest event and triggers an Azure Automation Runbook.

The blog post will show how to transfer data from the alert to the runbook, such as information about the new user account.

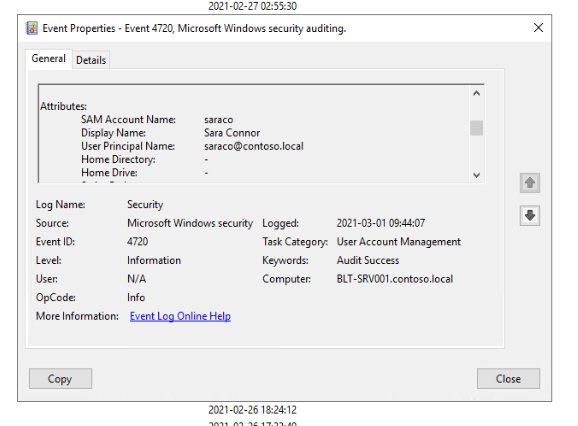

A new user account is created, named Sara Connor.

A security event is generated in the audit log.

The event is collected and sent to Log Analytics by a data collection rule.

An alert rule runs every five minutes to look for newly created accounts. The alert rule triggers the runbook. Note that the alert rule uses the Common Alert Schema to forward event information.

More information about the common alert schema is available at Microsoft Docs. Below are the query used in the alert rule and the runbook code.

Event

| where EventLog == "Security"

| where EventID == 4720

| parse EventData with * 'SamAccountName">' SamAccountName '<' *

| parse EventData with * 'UserPrincipalName">' UserPrincipalName '<' *

| parse EventData with * 'DisplayName">' DisplayName '<' *

| project SamAccountName, DisplayName, UserPrincipalName

Runbook:

param

(

[Parameter (Mandatory=$false)]

[object] $WebhookData

)

# Collect properties of WebhookData.

$WebhookName = $WebhookData.WebhookName

$WebhookBody = $WebhookData.RequestBody

$WebhookHeaders = $WebhookData.RequestHeader

# Name of the webhook that started this runbook

Write-Output "This runbook was started from webhook $WebhookName."

# Convert the WebhookBody from JSON to reach the AlertContext

$WebhookBody = (ConvertFrom-Json -InputObject $WebhookBody)

# The first row of the first result table holds the projected columns, in query order

$Row = $WebhookBody.data.alertContext.SearchResults.tables[0].rows[0]

Write-Output "####### New User Created #########"

Write-Output "Username: $($Row[0])"

Write-Output "Display Name: $($Row[1])"

Write-Output "User UPN: $($Row[2])"

This is the output from the runbook, including details about the new user account.

Inside Azure Management [e-book]

[March 27, 2019] We are excited to announce that the Preview release of Inside Azure Management is now available, with more than 500 pages covering many of the latest monitoring and management features in Microsoft Azure!

This FREE e-book is written by Microsoft MVPs Tao Yang, Stanislav Zhelyazkov, Pete Zerger, and Kevin Greene, along with Anders Bengtsson.

Description: “Inside Azure Management” is the sequel to “Inside the Microsoft Operations Management Suite”, featuring end-to-end deep dive into the full range of Azure management features and functionality, complete with downloadable sample scripts.

The chapter list in this edition is shown below:

- Chapter 1 – Intro

- Chapter 2 – Implementing Governance in Azure

- Chapter 3 – Migrating Workloads to Azure

- Chapter 4 – Configuring Data Sources for Azure Log Analytics

- Chapter 5 – Monitoring Applications

- Chapter 6 – Monitoring Infrastructure

- Chapter 7 – Configuring Alerting and notification

- Chapter 8 – Monitor Databases

- Chapter 9 – Monitoring Containers

- Chapter 10 – Implementing Process Automation

- Chapter 11 – Configuration Management

- Chapter 12 – Monitoring Security-related Configuration

- Chapter 13 – Data Backup for Azure Workloads

- Chapter 14 – Implementing a Disaster Recovery Strategy

- Chapter 15 – Update Management for VMs

- Chapter 16 – Conclusion

Download your copy here

Exporting Azure Resource Manager templates with Azure Automation, and protecting them with Azure Backup

Earlier this week I put together a runbook to back up Azure Resource Manager (ARM) templates for existing Resource Groups. The runbook exports the resource group as a template and saves it to a JSON file. The JSON file is then uploaded to an Azure File Share that can be protected with Azure Backup.

The runbook can be downloaded from here, PS100-ExportRGConfig. The runbook format is PowerShell. The runbook might require an Azure PS module upgrade. I have noticed that in some new Azure Automation accounts, the AzureRM.Resources module doesn’t include Export-AzureRmResourceGroup and needs an update.

Inside of the runbook, you need to configure the following variables:

- Resourcegrouptoexport, this is the Resource Group you would like to export to a JSON file.

- storageRG, this is the name of the Resource Group that contains the file share you want to upload the JSON file to.

- storageAccountName, this is the name of the storage account that contains the Azure file share.

- filesharename, this is the name of the Azure file share in the storage account. On the Azure file share, there needs to be a directory named templates. You will need to create that directory manually.

When you run the runbook you might see warning messages. There might be some cases where the PowerShell cmdlet fails to generate some parts of the template. Warning messages will inform you of the resources that failed. The template will still be generated for the parts that were successful.

Once the JSON file is written to the Azure File Share you can protect the Azure file share with Azure Backup. Read more about backup for Azure file shares here.

Disclaimer: Cloud is a very fast-moving environment. It means that by the time you’re reading this post everything described here could have been changed completely. Note that this is provided “AS-IS” with no warranties at all. This is not a production ready solution for your production environment, just an idea, and an example.

Keep your Azure subscription tidy with Azure Automation and Log Analytics

When delivering Azure training or Azure engagements there is always a discussion about how important it is to have a policy and a lifecycle for Azure resources. Not only do we need a process to deploy resources to Azure, we also need a process to remove resources. From a cost perspective this is extra important, as an orphan IP address or disk will cost money even if it is not in use. We also need policies to make sure everything is configured according to company policy. Much can be solved with ARM policies, but not everything. For example, you can’t make sure all resources have locks configured.

To keep the Azure subscription tidy and to get an event/recommendation when something is not configured correctly, we can use Azure Automation and OMS Log Analytics. In this blog post, I will show an example of how this can be done 😊 The data flow is:

- An Azure Automation runbook is triggered by a schedule or manually. The runbook runs several checks, for example if there are any orphan disks.

- If there is anything that should be investigated, an event is created in OMS Log Analytics.

- In the OMS portal, we can build a dashboard to get a good overview of these events.

The example dashboard shows (download the example dashboard here)

- Total number of recommendations/events

- Number of resource types with recommendations

- Number of resource groups with recommendations. If each resource group corresponds to a service, it is easy to see the number of services that are not configured according to policy

The runbook in this example checks if there are any disks without an owner, any VMs without automatic shutdown, any public IP addresses not in use, and any databases without a lock configured. The runbook is based on PowerShell, and it is easy to add more checks. The runbook submits data to OMS Log Analytics with Tao Yang's PS module for OMSDataInjection, download here. The data shows up in Log Analytics as a custom log called ContosoAzureCompliance_CL. The name of the log can be changed in the runbook.

The figure below shows the log search interface in the OMS portal. On the left side, you can see that we can filter based on resource, resource type, severity and resource group. This makes it easy to drill into a specific type of resource or resource group.

Disclaimer: Cloud is a very fast-moving target. It means that by the time you’re reading this post everything described here could have been changed completely.

Note that this is provided “AS-IS” with no warranties at all. This is not a production ready solution for your production environment, just an idea and an example.

Process OMS Log Analytic data with Azure Automation

Log Analytics in OMS provides a rich set of data processing features, for example custom fields. But there are scenarios where the current feature set is not enough.

In this scenario, we have a custom log file that logs messages from an application. From time to time the log file contains information about the number of files in an application queue. We would like to display the number of files in the queue as a graph in OMS. Custom fields will not work in this scenario because the log entries have many different formats, and OMS cannot figure out the structure of the log entries when not all of them follow the same structure. OMS doesn't support custom fields based on a subquery of the custom log entries, which otherwise could be a solution.

The example in this blog post ships the data to Azure Automation, processes it, and sends it back to Log Analytics in a suitable format. This can be done in two different ways:

- 1 – Configure an alert rule in Log Analytics to send data to Azure Automation. Azure Automation processes the data and sends it to OMS as a new custom log

- 2 – Azure Automation connects to Log Analytics and queries the data based on a schedule. Azure Automation processes the data and sends it to OMS as a new custom log

It is important to remember that events in Log Analytics don't have an ID. Whichever solution we choose, we must make sure all data is processed. If there is an interruption between Log Analytics and Azure Automation, it is difficult to track which events have already been processed.

One thing to note is that Log Analytics and Azure Automation show time differently. It seems that Azure Automation uses UTC when displaying time properties of the events, but the portal for Log Analytics (the OMS portal) uses the local time zone (in my example UTC+2 hours). This can be a bit tricky.
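To compare timestamps from the two sides, it can help to convert the UTC value explicitly. The sketch below is an illustration only; the function name and the fixed two-hour offset are assumptions, and a fixed offset does not follow daylight saving changes.

```powershell
# Sketch only: converts a UTC timestamp to a fixed offset such as UTC+2.
function Convert-UtcToOffset {
    param([datetime]$UtcTime, [double]$OffsetHours)
    # Mark the value as UTC so that DateTimeOffset does not assume the local time zone.
    $utc = New-Object System.DateTimeOffset ([datetime]::SpecifyKind($UtcTime, 'Utc'))
    return $utc.ToOffset([TimeSpan]::FromHours($OffsetHours))
}

# A value shown as 10:00 in Azure Automation corresponds to 12:00 at UTC+2.
$portalTime = Convert-UtcToOffset -UtcTime ([datetime]'2017-06-01T10:00:00') -OffsetHours 2
$portalTime.DateTime
```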

1 – An alert rule pushes data to Azure Automation

In this example we need to configure both Azure Automation and Log Analytics. The data flow will be:

- An event is inserted into Log Analytics

- The event triggers an alert rule in Log Analytics that triggers an Azure Automation runbook

- Azure Automation gets the data from the webhook and processes it

- Azure Automation sends the data back to Log Analytics as a new custom log

To configure this in Log Analytics and Azure Automation, follow these steps

- In Azure Automation, import the AzureRM.OperationalInsights PowerShell module. This can be done from the Azure Automation account module gallery. More information about the module here

- Create a new connection of type OMSWorkSpace in the Azure Automation account

- Import the example runbook, download from WebHookDataFromOMS

- In the runbook, update OMSConnection name, in the example named OMS-GeekPlayGround

- In the runbook, you need to update how the data is split and what data you would like to send back to OMS. In the example I send back Computer, TimeGenerated and Files to Log Analytics

- Publish the runbook

- In Log Analytics, configure an Alert Rule to trigger the runbook

- Done!

2 – Azure Automation queries Log Analytics

In this example we don't need to configure anything on the Log Analytics side. Instead, all configuration is done on the Azure Automation side. The data flow will be:

- Events are inserted into Log Analytics

- Azure Automation queries Log Analytics based on a schedule

- Azure Automation gets the data and processes it

- Azure Automation sends the data back to Log Analytics as a new custom log

To configure this in Azure Automation, follow these steps

- Import Tao Yang's PS module for OMSDataInjection into your Azure Automation account. Navigate to PS Gallery and click Deploy to Azure Automation

- Import the AzureRM.OperationalInsights PowerShell module. This can be done from the Azure Automation account module gallery. More information about the module here.

- Create a new connection of type OMSWorkSpace in the Azure Automation account

- Verify that there is a connection to the Azure subscription that contains the Azure Automation account. In my example the connection is named “AzureRunAsConnection”

- Import the runbook, download here, GetOMSDataAndSendOMSData in TXT format

- In the runbook, update OMSConnection name, in the example named OMS-GeekPlayGround

- In the runbook, update Azure Connection name, in the example named AzureRunAsConnection

- In the runbook, update OMS workspace name, in the example named geekplayground

- In the runbook, update Azure Resource Group name, in the example named “automationresgrp”

- In the runbook, update the Log Analytics query that Azure Automation runs to get data, in the example “Type=ContosoTestApp_CL queue”. Also update $StartDateAndTime with the correct start time. In the example Azure Automation collects data from the last hour (now minus one hour)

- In the runbook, you need to update how the data is split and what data you would like to send back to OMS. In the example I send back Computer, TimeGenerated and Files to Log Analytics.

- Configure a schedule to execute the runbook at suitable intervals.

Both solutions send back the number of files in the queue to Log Analytics as a double. One of the benefits of building a custom PowerShell object and converting it to JSON before submitting it to Log Analytics is that you can easily control the data type. If you simply submit data to Log Analytics, the data type is detected automatically, but sometimes the automatic data type is not what you expect. With the custom PS object you can control it. Thanks to Stan for this tip. The data will be stored twice in Log Analytics: the raw data and the processed data from Azure Automation.
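The idea of controlling the data type with a custom object can be sketched as follows. The log line format, the function name and the computer name are hypothetical, since the real application log and runbook are not shown here; only the property names Computer, TimeGenerated and Files come from the post. The cast to `[double]` is what makes the value serialize as a JSON number rather than a string.

```powershell
# Hypothetical log line; the real application log format is not shown in the post.
$sampleLine = '2017-05-02 10:15:00 INFO Files in queue: 42'

function ConvertTo-QueueRecord {
    param([string]$Computer, [datetime]$TimeGenerated, [string]$LogLine)
    # Only lines that mention the queue produce a record; other lines return nothing.
    if ($LogLine -match 'Files in queue:\s*(\d+)') {
        [pscustomobject]@{
            Computer      = $Computer
            TimeGenerated = $TimeGenerated.ToString('o')
            Files         = [double]$Matches[1]   # explicit type instead of a string
        }
    }
}

$record = ConvertTo-QueueRecord -Computer 'srv01' -TimeGenerated (Get-Date) -LogLine $sampleLine
$json   = $record | ConvertTo-Json
$json
```

Without the cast, the regex capture stays a string, and the resulting field would likely be detected as a string in Log Analytics.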

Disclaimer: Cloud is a very fast-moving target. It means that by the time you’re reading this post everything described here could have been changed completely.

Note that this is provided “AS-IS” with no warranties at all. This is not a production ready solution for your production environment, just an idea and an example.

Building a log for your runbooks

